With the recent CRN article about HP considering the acquisition of SimpliVity, http://www.crn.com/news/data-center/300073066/sources-hewlett-packard-in-talks-to-acquire-hyper-converged-infrastructure-startup-simplivity.htm, it seems a good time to look at what SimpliVity does, and why it is an attractive acquisition target.

To answer both questions, we need to look at history. First was the mainframe. That was great, but inflexible, so we moved to distributed computing. This was substantially better, and brought us into the new way of computing, but there was a great deal of waste. Virtualization came along and enabled us to get higher utilization rates on our systems, but it required an incredible amount of design work up front, and it allowed the siloed IT department to proliferate, since it did not force anyone to learn a skillset outside their own particular area of expertise. This led us to converged infrastructure, the idea that you could get everything from a single vendor, or at the very least support from a single vendor. Finally came the converged system. It provided a single vendor/support solution, packaged as one system, and we used it to grow the infrastructure based on performance or capacity. It was largely inflexible, by design, but it was simple to scale, and predictable.

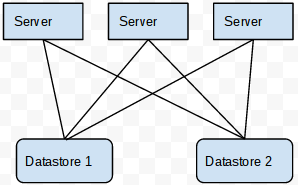

To solve this inflexibility, companies started working on the concept of Hyper-Convergence. Basically, these were smaller, discrete converged systems, many of which created high availability zones not through redundant hardware in each node, but through clustering. The software lived on each discrete converged node, and it was good. Compute, Network, and Storage all scaled out in small, pre-defined, discrete nodes, which made capacity planning easier and let fewer IT administrators manage larger environments. This was truly a Software Defined Data Center, but at a scale that could start small and grow organically.

Why then is this interesting for a company like HP? As always, I am not an insider and I have no information that is not public. I am engaging in speculation, based on what I am seeing in the industry. Looking at HP’s Converged Systems strategy, and at what the market is doing, I believe that in the near future the larger players in this space will look to converged systems as the way to sell. Hyper-convergence is a way forward to address the part of the market that is either too small or needs something that traditional converged systems cannot provide. Hyper-convergence can provide a complementary product to existing converged systems, and will round out solutions in many datacenters.

Hyper-Convergence is a part of the inevitable evolution of technology. Whether or not HP ends up purchasing SimpliVity, these types of conversations show that such concepts are starting to pick up steam. It is time for companies to innovate or die, and this is a perfect opportunity for an already great company to keep moving forward.